Artificial intelligence (AI) systems are transforming our world in many ways, from healthcare to entertainment to education. But how do we align AI systems with diverse human values? OpenAI’s grant program is an attempt to answer exactly this question!

Ethical guidelines are not just rules or regulations, but rather principles and values that can guide the development and use of AI systems in a responsible and beneficial way.

In this post, I will tell you about an exciting opportunity to get involved in shaping the ethical guidelines for AI systems. I will also tell you about some existing proposals and frameworks for human-centric ethical guidelines for AI systems.

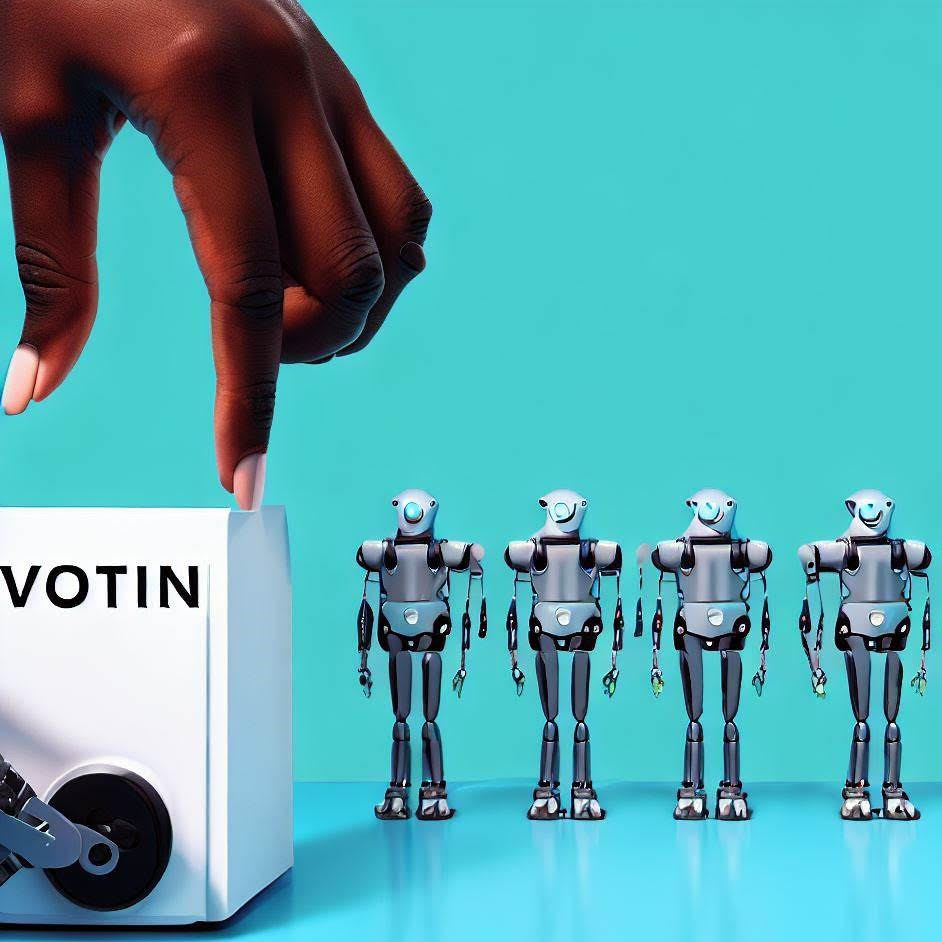

OpenAI Launches Grants for Democratic Processes for AI

OpenAI, Inc., a nonprofit organization that aims to create safe and beneficial artificial intelligence (AI) for humanity, has announced a new program to fund experiments in setting up a democratic process for deciding what rules AI systems should follow.

The program will award ten $100,000 grants to anyone who has an idea for how to implement such a process, whether they are researchers, activists, artists, or entrepreneurs. The goal is to fund experimentation with methods for gathering nuanced feedback from everyone affected by AI systems and translating that feedback into actionable guidelines for AI developers and users.

The deadline for submitting proposals is June 30, 2023. The proposals will be evaluated by a panel of experts from various fields and backgrounds. The selected grantees will have one year to conduct their experiments and report their findings and recommendations to OpenAI and the public.

The program is part of OpenAI’s effort to align AI systems with human values and diversity. The organization believes that AI should benefit everyone and not just a few, and that everyone should have a say in how AI is developed and used.

This program seems to be inspired by the idea of “Democratic Responsibility in the Digital Public Sphere”, proposed by Professor Joshua Cohen in a paper published in April 2023. Cohen argued that AI systems should be subject to public deliberation and accountability, and that democratic processes can help ensure that AI systems respect human rights and dignity.

OpenAI hopes that this program will inspire more dialogue and collaboration among different stakeholders and communities on how to shape the future of AI in a way that respects human values and diversity.

Benefits of OpenAI’s Approach

OpenAI’s program is a commendable attempt to address the ethical challenges posed by AI systems. As AI systems become more powerful and pervasive, they will have significant impacts on various aspects of human life, such as privacy, security, justice, and democracy. Therefore, it is crucial that AI systems are designed and deployed in a way that respects human values and diversity, and that they are subject to public oversight and accountability.

However, achieving this goal is not easy. There are many uncertainties and complexities involved in defining and measuring human values and diversity, as well as in translating them into concrete rules and standards for AI systems. Moreover, there are many different stakeholders and communities that have different perspectives and interests in relation to AI systems, and that may not have equal access or influence in shaping them.

That is why OpenAI’s program is a welcome initiative. By inviting anyone with an idea for how to set up a democratic process for deciding what rules AI systems should follow, OpenAI is opening up the space for experimentation and innovation in this domain. By funding experiments that gather nuanced feedback from everyone affected by AI systems, it is promoting the inclusion and participation of diverse voices and views in the design and use of AI systems. And by asking grantees to translate that feedback into actionable guidelines for AI developers and users, it is helping to align AI systems with human values and diversity.

OpenAI’s program is an example of how AI can be developed and used in a way that is consistent with a democratic view of ethics. A democratic view of ethics holds that ethical decisions should be made through a process of public deliberation and accountability, rather than by a few experts or authorities. A democratic view of ethics also recognizes that ethical decisions are not fixed or final, but rather subject to ongoing revision and improvement based on new evidence and arguments.

By launching this program, OpenAI is showing its commitment to creating safe and beneficial AI for humanity. It is also showing its respect for the dignity and autonomy of human beings as the ultimate source and judge of ethical values.

Concerns with OpenAI’s Metaethical Analysis

However, OpenAI’s program is not without its flaws. One of the main criticisms that can be raised against it is that it relies on a metaethical perspective that falls prey to the ad populum fallacy. The ad populum fallacy is a logical error that occurs when one argues that something is true or good simply because many people believe or agree with it.

OpenAI’s program assumes that human values and diversity can be determined by aggregating the opinions and preferences of everyone affected by AI systems. It also assumes that these opinions and preferences are valid and reliable sources of ethical guidance for AI systems. However, these assumptions are questionable. Just because many people believe or agree with something does not mean that it is true or good. People can be mistaken, biased, ignorant, or manipulated by various factors that influence their judgments and choices. Moreover, people can have conflicting or incompatible values and interests, which may not be easily reconciled or harmonized by a democratic process.
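To see why aggregating preferences may not settle the question, consider the Condorcet paradox from social choice theory. This illustration is my own and is not part of OpenAI’s program. The sketch below assumes three hypothetical groups ranking three hypothetical guideline priorities ("privacy", "transparency", "access"); the rankings are made up purely to show the effect. Each group is individually consistent, yet simple pairwise majority voting produces a cycle, so no option beats every other option.

```python
from itertools import combinations

# Hypothetical rankings by three groups (illustrative only, not real data).
# Earlier in the list means more preferred.
rankings = [
    ["privacy", "transparency", "access"],
    ["transparency", "access", "privacy"],
    ["access", "privacy", "transparency"],
]


def prefers(ranking, x, y):
    """True if this ranking places option x above option y."""
    return ranking.index(x) < ranking.index(y)


def majority_winner(rankings, x, y):
    """Return whichever of x and y a strict majority of rankings prefers."""
    votes_for_x = sum(prefers(r, x, y) for r in rankings)
    return x if votes_for_x > len(rankings) / 2 else y


def condorcet_winner(rankings):
    """Return the option that wins every head-to-head vote, or None if none does."""
    options = rankings[0]
    for x in options:
        if all(majority_winner(rankings, x, y) == x for y in options if y != x):
            return x
    return None


if __name__ == "__main__":
    for x, y in combinations(rankings[0], 2):
        print(f"{x} vs {y}: majority prefers {majority_winner(rankings, x, y)}")
    print("Condorcet winner:", condorcet_winner(rankings))
```

With these rankings, privacy beats transparency, transparency beats access, and access beats privacy, so `condorcet_winner` returns `None`. Real deliberative processes are richer than a single vote, but the example shows concretely how individually coherent preferences can fail to add up to a coherent collective choice.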

Therefore, OpenAI’s program may not be able to capture the true or best ethical values and standards for AI systems. It may end up endorsing or imposing values and standards that are based on false or dubious beliefs, or that favor some groups or interests over others. It may also fail to address some of the deeper or more fundamental ethical questions and challenges that AI systems pose, such as the nature and purpose of human existence, the moral status and rights of non-human entities, or the limits and responsibilities of human agency.

OpenAI’s program may be a well-intentioned attempt to align AI systems with human values and diversity, but it may not be sufficient or satisfactory from a metaethical perspective. It will need to be complemented or revised by other approaches that can provide more rigorous and robust ethical foundations and frameworks for AI systems.

Creating Human-Centric AI

So, how can we create artificial intelligence that is human-centric? How can we ensure that AI systems are aligned with human values and diversity, while also respecting the law and the common good? How can we balance the benefits and risks of AI systems for humanity and the environment?

These are some of the questions that ethical guidelines for AI systems need to address.

There are many different proposals and frameworks for ethical guidelines for AI systems, such as the Asilomar AI Principles, the IEEE’s Ethically Aligned Design, the EU High-Level Expert Group on AI’s Ethics Guidelines for Trustworthy AI, or the Rome Call for AI Ethics. However, most of them share some common elements and themes.

Here are some examples of ethical guidelines for AI systems that are human-centric:

- AI systems should respect human dignity and autonomy. They should not harm or exploit human beings or violate their rights and freedoms. They should also allow human beings to exercise control and choice over their interactions with AI systems, and to opt out or withdraw from them if they wish.

- AI systems should promote human well-being and social good. They should contribute to the improvement of human health, education, culture, and quality of life. They should also support social justice, inclusion, and diversity, and avoid discrimination, bias, or unfairness.

- AI systems should be transparent and accountable. They should provide clear and accurate information about their capabilities, limitations, purposes, and impacts. They should also be subject to oversight and audit by human authorities, and the people and organizations that develop and deploy them should be held liable for any harm or damage they cause.

- AI systems should be reliable and secure. They should function as intended and expected, and be resilient to errors, failures, or attacks. They should also protect the privacy and security of human data and information and prevent unauthorized access or misuse.

- AI systems should be sustainable and environmentally friendly. They should minimize their negative impacts on the environment and natural resources and maximize their positive contributions to ecological balance and biodiversity.

These are some of the ethical guidelines that can help us create artificial intelligence that is human-centric. Of course, these guidelines are not exhaustive or definitive, but rather indicative and flexible. They may need to be adapted or revised according to different contexts and situations. They also need to be complemented by other methods or mechanisms that can ensure their implementation and enforcement.

However, these guidelines can provide a useful starting point and a common reference for anyone who is involved in or affected by AI systems. They can help us foster a culture of ethical awareness and responsibility among AI developers and users. They can also help us engage in constructive dialogue and collaboration among different stakeholders and communities on how to shape the future of AI in a way that respects human values and diversity.

Conclusion

AI systems are transforming our world in many ways, but they also pose many ethical challenges. Aligning AI systems with diverse human values is an essential step for algorethics, the ethics of algorithms. Together, we can balance the benefits and risks of AI systems for humanity and our environment.

I have told you about an exciting opportunity to get involved in shaping the ethical guidelines for AI systems: OpenAI’s program to fund experiments in setting up a democratic process for deciding what rules AI systems should follow. I have also told you about some existing proposals and frameworks for human-centric ethical guidelines for AI systems.

I hope you enjoyed this post and found it informative. If you have any questions or comments, feel free to leave them below or tweet me at @PaulWagle. And don’t forget to share this post with your friends and followers!